Protohackers is a server programming challenge in which each problem asks you to implement a network protocol. It started not so long ago, and challenge No. 3 was released just yesterday, aiming at creating a simple (“Budget”) multi-user chat server. I thought I’d sacrifice a decent part of my weekend to give it an honest try. This is the short story of trying, failing, and then getting more knowledge out of it than I expected.

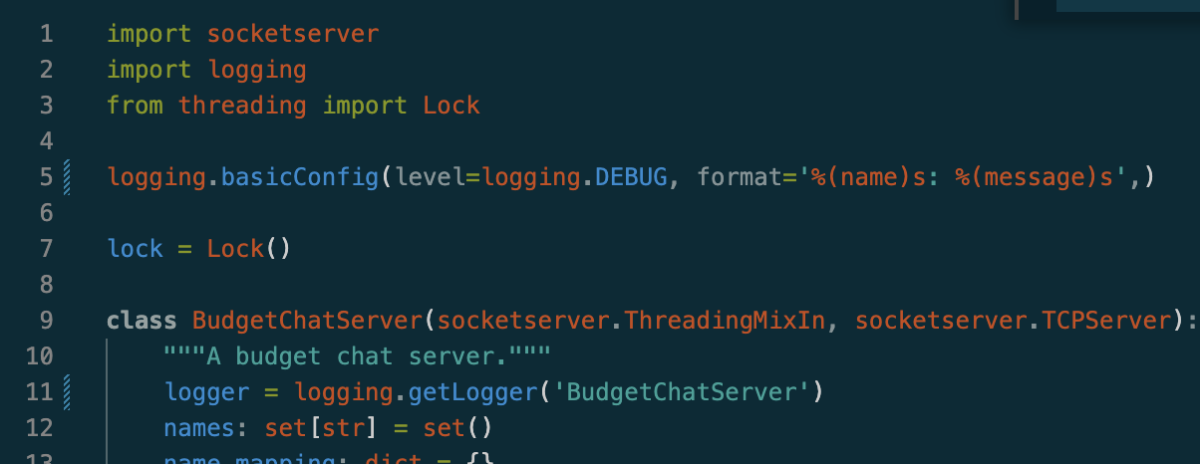

I definitely wanted to tackle it using Python, as that’s my current utility language and the one I want to know the most about. Since the aim of Protohackers, I think, is to build things from scratch, I set out to use only the standard library. After some poking around in the documentation I ended up choosing SocketServer as the basis of the work. It seemed suitable, but there was a severe dearth of non-dummy code and deeper explanation. In a couple of hours I did make some progress, though, which already felt exciting:

- Figured out (to some extent) the purpose of the server / handler parts in practice

- Made things multi-user with data shared across connections

- Grokked a bit of the request lifecycle, though definitely not fully, especially not how disconnections happen
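
To make those three points concrete, here is a minimal sketch of how they can fit together using the standard library's `socketserver` module (the Python 3 name). This is not the code I wrote that weekend: the `ChatServer` and `ChatHandler` names, the `clients` dictionary, and the message formats are all made up for illustration, and the protocol is much simpler than the actual Budget Chat spec. The server object holds the shared state, each connection gets its own handler instance, and a disconnection shows up as the read loop simply running out of data.

```python
import socketserver
import threading


class ChatServer(socketserver.ThreadingTCPServer):
    """Server part: one thread per connection, plus state shared by all of them."""

    allow_reuse_address = True
    daemon_threads = True

    def __init__(self, address, handler_class):
        super().__init__(address, handler_class)
        self.clients = {}             # handler -> user name (hypothetical shared state)
        self.lock = threading.Lock()  # guards self.clients across handler threads

    def broadcast(self, text, skip=None):
        # Copy the target list under the lock, then write outside of it.
        with self.lock:
            targets = [h for h in self.clients if h is not skip]
        for handler in targets:
            try:
                handler.wfile.write(text.encode() + b"\n")
            except (OSError, ValueError):
                pass  # that peer is already gone; its own handler cleans up


class ChatHandler(socketserver.StreamRequestHandler):
    """Handler part: one instance per connection, run as setup -> handle -> finish."""

    def handle(self):
        # Simplified assumption: the first line a client sends is its name.
        name = self.rfile.readline().decode(errors="replace").strip()
        if not name:
            return  # client left before introducing itself
        with self.server.lock:
            self.server.clients[self] = name
        self.server.broadcast(f"* {name} joined", skip=self)
        # Iteration stops when readline() returns b"", i.e. the peer closed
        # the connection. That is how a clean disconnection becomes visible.
        for raw in self.rfile:
            line = raw.decode(errors="replace").rstrip("\r\n")
            self.server.broadcast(f"[{name}] {line}", skip=self)

    def finish(self):
        # finish() runs even if handle() raised (for example on a reset).
        with self.server.lock:
            name = self.server.clients.pop(self, None)
        if name:
            self.server.broadcast(f"* {name} left")
        super().finish()
```

Starting it would look something like `ChatServer(("0.0.0.0", 8000), ChatHandler).serve_forever()`, where the port is an arbitrary choice. Writing to another connection's `wfile` from a different thread is good enough for a sketch with short lines, but I would not count on it holding up under real load.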

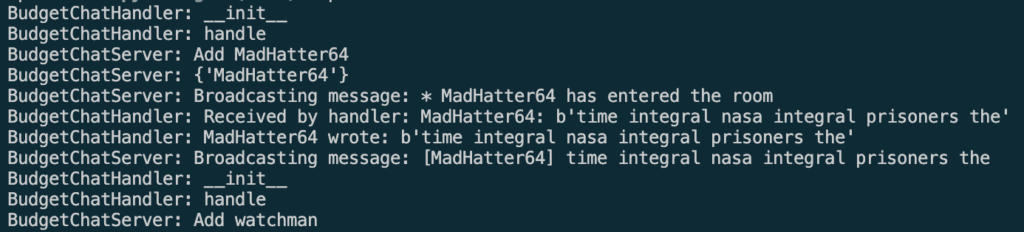

Still, it was working to some extent: I could make a server that functioned, for a certain definition of “functioned”, as the logs attest: