TL;DR: if you are about to become a GitLab enterprise user, it’s time to split your work from your passion.

Team members just starting their version control journey with the likes of GitHub and GitLab often ask me whether to keep separate accounts for work and personal projects, or a single one for both.

So far my advice has been pretty much “use a single one”, for several reasons: every service seems to handle email aliases, git+ssh is painful enough with a single account, let alone multiple, and people generally seem to build their professional and open source contributions under a single persona anyway.

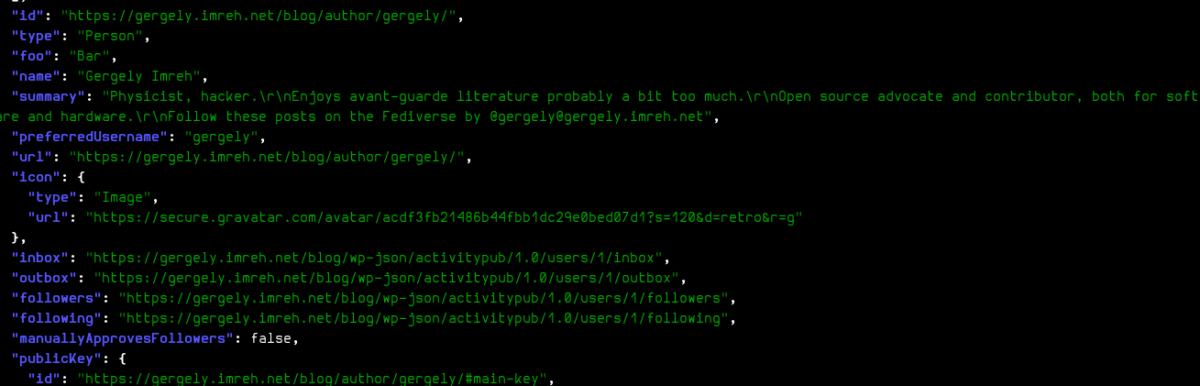

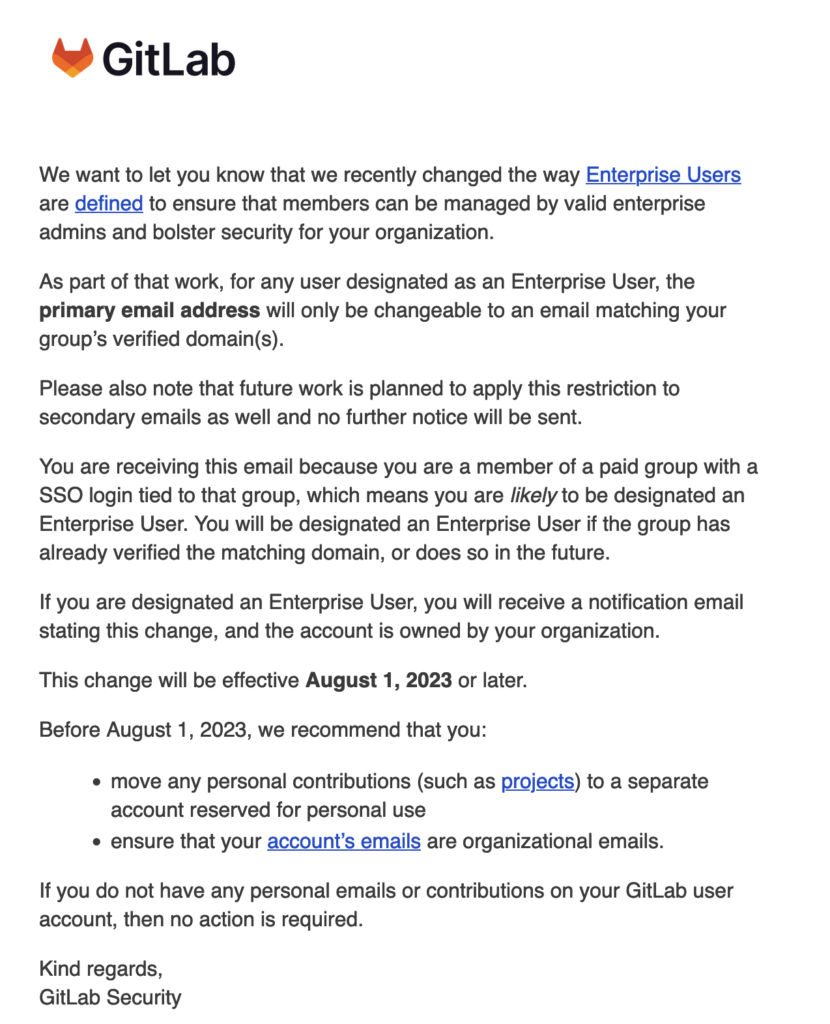

This advice no longer stands, at least for GitLab. I recently received this email, which explains how their use of Enterprise Users (with SSO login and domain verification) makes it absolutely necessary to separate work and personal accounts.

I’ve been looking for a blog post or other announcement but couldn’t find one, hence the repost here. I’m definitely going to scramble a bit to create some new accounts (and keep my preferred username for the personal one).