World Creativity and Innovation Week (WCIW) runs every year on April 15-21 (set to coincide with Leonardo da Vinci’s birthday). This year we organized some events in Taiwan as well: first one about creativity, Create @ Public, and a week later another one for innovation, Hack+Taiwan, a hackathon.

Of the two events, the hackathon was the trickier one to do. For Create@Public, all I had to do was pack a lot of stationery and start making stuff myself. For a hackathon, there’s much more preparation to do. Fortunately, I had some great mentors to get things going: James of Startup Digest Taipei and Volker from Yushan Ventures. They have a lot of experience pulling off great events, and I was glad to hear their advice. I was surprised that something like this could be put together in less than 2 weeks, even under not totally favorable circumstances.

First I had to find a place to host it, preferably with zero budget. Looking at who I knew, I ended up at appWorks, a startup accelerator whose founder, Jamie, is indeed a hacker at heart, so it wasn’t actually that hard to convince him to give us some space on a weekend day. I even got one of the teams working there, Fandora, to help us.

That was a good first step; next I had to get some people to participate. I set up the Hack+Taiwan blog (on Octopress, just trying something new, and that was an “interesting” experience as well), the event page and a sign-up sheet, and started to spread the word. It all went well until, one week before the event, it turned out that the Open Source Developers’ Conference had a hackathon at pretty much the same day and time as I had planned. That freaked me out a little, and after asking around, I got lots of different advice: cancel so as not to compete with them, cancel to take a rest, or give it my best try nonetheless. Of course the craziest option won, so I started to spread the word even more, inviting mentors, arranging catering, figuring out how the day should work and so on.

Let’s get to it

Since people don’t like to fill out sign-up sheets, and clicking “join” on a Facebook event is so easy, I had absolutely no idea how many people would come. I was just winging it: I knew I’d be there, along with a couple of people who were helping me, but that was all.

I ordered breakfast for 30 (from Magic Bagels) and waited to see what would happen. Around 9am, the advertised start time, people started to arrive, first a couple, then some more, and by around 10 o’clock there were almost a dozen people. That’s not bad at all, even considering that a third of them were mentors and other organizers, since they took part just the same. Since most people didn’t bring any ideas (some didn’t even bring a laptop, now that I don’t understand), I had to get them to come up with some stuff on the spot. It took a little pushing, and some ideas were very specific and pretty much the same as what people work on in their normal time, but fortunately there were some interesting ones too. In about half an hour we had 3 teams working on three completely different projects. In the afternoon even more people dropped by for some time, and got another project going.

The projects we had:

- Mobile reminder app + website

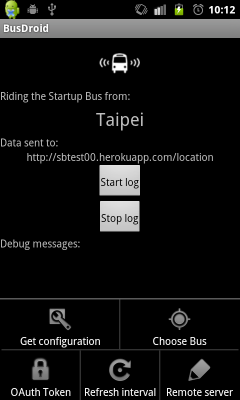

- Android programming (the team had to leave before demo time)

- Visualization of the Taiwanese power grid for monitoring and supervision

- Arduino piano

At 5:30pm we had demo time, with everyone showing off what they had made. People switched to speaking Chinese since I was the only foreigner around, so I’ve got to train a little harder to be able to follow things next time. I could see their demos, though, and it’s so impressive how far people got in pretty much six and a half hours.

Here’s the photo album of what went down. I also took a timelapse image series of the event and was planning to make a video of it... and then successfully overwrote all the photos with a bad ffmpeg command. That’s my way.

Lessons learned

- Coming up with ideas is hard

- It is still possible to come up with ideas. Look for issues that have been bugging you for a while, and do something about those

- Everyone gets something different out of these events

- Don’t force things, everything will work out anyway

- No need to overplan: until you have established your name in the community as a good organizer, you are more likely to overestimate than underestimate how many people will come to an event

- Everyone loves bagels and cake (okay, that’s not a new lesson)

- Unless there are enough people, there’s no need to focus the topic of a hackathon; see whatever people come up with. The brainstorming is more difficult, but the chances of success are better

- Don’t drink too much coffee – I couldn’t get much done from the shakes

I really liked the feedback I got from the participants, and from other people too: some had just been working in the background on their own stuff, and when we finished they came over and asked what it was, because they’d like to take part next time. That’s the spirit! And we might just do that, for example at the end of the summer. In the meantime, I will try to focus on earning back my own hacker badge.