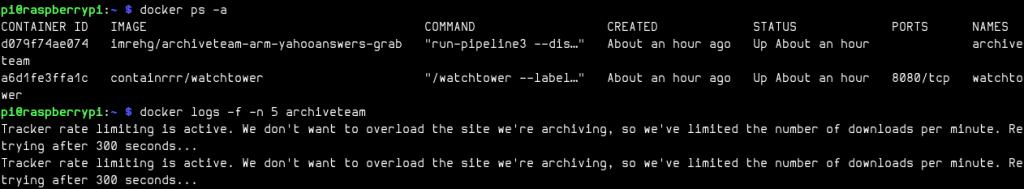

Two weeks ago I came across a thread on Hacker News linking to an announcement that Yahoo! Answers would shut down by early May. One of the early comments pointed people to the Archive Team and their project to archive Yahoo! Answers before that 4th May deadline. It looked interesting, so I gave their recommended tool, the Archive Team Warrior, a spin. It runs in VirtualBox, and it is super easy to set up, lightweight, and all-around good. Nevertheless, after one night of keeping my laptop running and archiving things, I was wondering if there was a less wasteful way of doing the archiving. In short order I came across the team’s notes on how to run the archiver as Docker containers. That’s more like it: I have some Raspberry Pi devices running at home anyway, and if those could be the archiving clients it would make a lot more sense!

While trying out the instructions, it quickly became clear that the images provided by the Archive Team did not work on the Pi out of the box. Could they be made to work, though? (Spoiler: they could, and you can check the results on GitHub.)

Container ARM-ification

The issue was that the project container provided by the Archive Team, atdr.meo.ws/archiveteam/yahooanswers-grab, is only available for amd64 (x86-64) machines. Fortunately the source code is available on GitHub, so I could check out the relevant Dockerfile. It uses a specific base image, atdr.meo.ws/archiveteam/grab-base, whose repo reveals that internally it pulls in atdr.meo.ws/archiveteam/wget-lua, which contains a modified version of wget from yet another repo (adding Lua scripting support, plus a bunch of other stuff added by Archive Team). Fortunately, this is the bottom of the stack, and it is based on debian:buster-slim, which does come as a multi-architecture image providing arm64, armv7, and armv6 versions: the architectures that are relevant for us when building for the Raspberry Pi (and other ARM boards, since why not).

Thus the challenge is now to rebuild the whole stack of wget-lua → grab-base → yahooanswers-grab images with these extra architectures. This would allow our humble Pi to work, since the main Archive Team payload seems to be Python and only the (forked) wget in there is architecture dependent.

There was a very handy blog post on the Docker site on how to actually do that using buildx, and it’s beautifully straightforward:

- create a “builder” for a set of compatible architectures

- run the build command through buildx with extra flags defining the architectures you need
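As a rough illustration of those two steps (not the commands from the blog post itself), the sketch below assembles the two `docker buildx` invocations as argument lists. The builder name `multiarch` and the image name `example/wget-lua` are made up for the example, and the `--push` flag assumes you already have a registry repository to push to.

```python
from typing import List

# The three ARM targets named earlier in the post.
PLATFORMS = ["linux/arm64", "linux/arm/v7", "linux/arm/v6"]


def buildx_commands(tag: str, context: str = ".",
                    builder: str = "multiarch") -> List[List[str]]:
    """Return the two docker invocations as argument lists (nothing is run)."""
    # Step 1: create a builder and make it the active one.
    create = ["docker", "buildx", "create", "--name", builder, "--use"]
    # Step 2: build for every platform in one go and push the result.
    build = [
        "docker", "buildx", "build",
        "--platform", ",".join(PLATFORMS),
        "--tag", tag,
        "--push",  # assumes a registry repository exists for `tag`
        context,
    ]
    return [create, build]
```

Calling `buildx_commands("example/wget-lua:latest")` only returns the two command lists; to actually run them you would hand each one to something like `subprocess.run(cmd, check=True)` on a machine with Docker and buildx installed.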

This simple setup hides a few gotchas, though.

Firstly, when building for multiple architectures, you most likely need the relevant target Docker repository set up, as buildx will need to push the images somewhere. By default the built images stay inside the “builder” container and won’t show up locally in “docker images”.

Secondly, for simplicity all the builds happen in parallel, and for “n” architectures I’ve seen what felt like a more-than-n-times slowdown (anecdotal, I haven’t measured it). This slowdown had some unexpected effects. My Raspberry Pi 3 running Arch Linux ARM (armv8, also called arm64) could in principle build all 3 relevant architectures listed above, but while building 2 of them together worked fine, building all 3 resulted in various timeouts that prevented the build from finishing successfully, and that after the 2 hours or so it took to build the “wget-lua” image… Not fun.

This needed a change of approach, so I started to go down the emulated build path instead, using Docker with QEMU and thus enabling x86 machines to build ARM images. Here I enlisted GitHub Actions to have a remote build machine, together with the Docker Setup QEMU and the Docker Setup Buildx actions. They allowed me to potentially build for any of these platforms: linux/amd64, linux/arm64, linux/riscv64, linux/ppc64le, linux/s390x, linux/386, linux/mips64le, linux/mips64, linux/arm/v7, linux/arm/v6

Then the whole thing came down to:

- If the stage uses a base image (in the FROM statement) that I have built myself, replace the Archive Team image name with the name of my image.

- Since some images install Python packages that might not exist in prebuilt wheel format, the Dockerfile needs to be patched to install the build requirements for that “pip install” to succeed.
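The first of these steps, swapping the base image, could be sketched roughly like this. This is an illustration rather than the actual build script: the `example/...` image names are placeholders, and the function assumes simple `FROM image[:tag] [AS name]` lines (no `--platform` flag, no registry port in the image name).

```python
import re
from typing import Dict

# Placeholder mapping: upstream image name -> rebuilt multi-arch image name.
REPLACEMENTS = {
    "atdr.meo.ws/archiveteam/wget-lua": "example/wget-lua",
    "atdr.meo.ws/archiveteam/grab-base": "example/grab-base",
}

# Captures "FROM ", the image reference, and whatever follows (e.g. " AS name").
FROM_LINE = re.compile(r"^(\s*FROM\s+)(\S+)(.*)$", re.IGNORECASE)


def patch_from_lines(dockerfile: str, replacements: Dict[str, str]) -> str:
    """Swap base images in FROM lines, keeping any tag and 'AS name' suffix."""
    patched = []
    for line in dockerfile.splitlines():
        match = FROM_LINE.match(line)
        if match:
            prefix, image, rest = match.groups()
            # Split off an optional ":tag" so it can be re-attached unchanged.
            name, sep, tag = image.partition(":")
            if name in replacements:
                line = f"{prefix}{replacements[name]}{sep}{tag}{rest}"
        patched.append(line)
    # Preserve a trailing newline if the input had one.
    return "\n".join(patched) + ("\n" if dockerfile.endswith("\n") else "")
```

Images that are not in the mapping, such as debian:buster-slim, pass through untouched, so the same function can be applied to every Dockerfile in the stack.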

These steps were packaged up in a build script (the link is to the current snapshot, just FYI), which I used to successfully build all images one by one and push them to Docker Hub. From here I could finally set up the archiving task on the Pi and start contributing to the scrape again! 🎉

Are we done yet, though? I noticed that once the upstream code for the task changes, my running task just stops doing anything until it is restarted with the latest version. The Archive Team uses an extra Watchtower container that checks running containers for newer versions of their images and, if it finds any, updates and restarts things. Fortunately Watchtower containers are provided in multi-architecture fashion, so I didn’t need to fork/compile that; I only needed to rebuild my images whenever the upstream projects changed…

Keeping things up to date

So let’s rebuild the images, automatically for good measure, so we won’t have to keep an eye on when it is due. But how to best do that?

The current idea I settled on uses Docker image tags to communicate which versions are available and which aren’t (yet).

- when building an image, also push a tag that is the upstream source repo’s commit hash

- when preparing to build next time, check the latest upstream commit hash, and check whether a corresponding image exists on Docker Hub. Only build if no such image exists.
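A minimal sketch of that check, assuming the `docker` CLI is available and that `docker manifest inspect` exits with a non-zero status when the tag does not exist. The function names and the `example/grab-base` image are invented for the illustration; the real repo may do this differently.

```python
import subprocess
from typing import Callable


def tag_exists(image_ref: str) -> bool:
    """True if the registry has a manifest for image_ref (e.g. name:commit)."""
    result = subprocess.run(
        ["docker", "manifest", "inspect", image_ref],
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    )
    return result.returncode == 0


def needs_build(image: str, upstream_commit: str,
                exists: Callable[[str], bool] = tag_exists) -> bool:
    """Build only when no image tagged with the upstream commit exists yet."""
    return not exists(f"{image}:{upstream_commit}")
```

The `exists` parameter is there so the decision logic can be tried out without Docker, for example by passing in a lookup against a set of known tags instead of the real registry query.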

This is complicated, however, by the fact that we also want to rebuild things automatically not just when the last image in the stack has a new commit, but when the projects upstream of it in the stack do as well! I tried to put together some logic in a Makefile that would handle all this rebuilding in a dependency-aware way, but it started to become very hairy very quickly. So instead I settled on Invoke to have some resemblance to make-like tasks, but with more flexibility thanks to Python. With a bit of trial and error I ended up with crude dependency management and external script calls (git rev-parse for remote repo status, docker manifest for image status, and the build script).
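To make the dependency-aware part concrete, here is a small plain-Python sketch (not using Invoke, and not the code from the repo) of how a change anywhere in the wget-lua → grab-base → yahooanswers-grab stack could propagate downwards. The `STACK` table and the function name are assumptions made for the example.

```python
from typing import Dict, List, Optional, Set

# Each image and the image it is built FROM (None for the bottom of the stack).
STACK: Dict[str, Optional[str]] = {
    "wget-lua": None,
    "grab-base": "wget-lua",
    "yahooanswers-grab": "grab-base",
}


def rebuild_order(stack: Dict[str, Optional[str]],
                  changed: Set[str]) -> List[str]:
    """List the images to rebuild, base images first.

    An image is rebuilt if its own upstream repo changed, or if the image
    it is built on is being rebuilt.
    """
    resolved: Dict[str, bool] = {}

    def dirty(image: str) -> bool:
        if image not in resolved:
            parent = stack[image]
            resolved[image] = image in changed or (
                parent is not None and dirty(parent)
            )
        return resolved[image]

    def depth(image: str) -> int:
        parent = stack[image]
        return 0 if parent is None else 1 + depth(parent)

    # Sorting by depth guarantees a base image is built before its dependants.
    return sorted((image for image in stack if dirty(image)), key=depth)
```

With this table, a new commit in grab-base alone yields `["grab-base", "yahooanswers-grab"]`, while a change in wget-lua rebuilds all three images in order.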

The first build took roughly 1:45h; afterwards, with caching, it takes 30-60s for the GitHub action to run when it has nothing to build. That’s fast enough to put it on a schedule and try to rebuild every half an hour or so.

And with this, we are pretty much done: here’s the relevant GitHub repo, all packaged up. So far it works pretty decently, and it already did one automatic rebuild & update of the images this week that I didn’t have to know about.

Future improvements

No programming project is ever really finished, and this one has a lot of room for improvement.

I could add images for more Archive Team projects (ideally all of them). This is complicated by the fact that some of the images come in multiple forms (e.g. wget-lua built with openssl or gnutls), and my current build system doesn’t take that into account. I would need to replicate support for those build args and expand the “has this image been built?” checks to take them into account as well for robustness.

But the more ambitious, likely trickier, and better bang-for-buck task would be folding this work into the official Archive Team images. This is likely challenging for a few reasons. The first, easier aspect is that they use another Docker registry (not Docker Hub) and I don’t know whether that supports multi-arch images (though it should). The trickier part is that they use Drone as their CI/CD tool, and its Docker plugin is not based on buildx (naturally, I guess), nor does Drone have any other plugin that we could use here (unlike GitHub Actions). So besides code changes, some convincing would also be needed to encourage tool changes. A much higher bar.

Finally, it would be interesting to dig into the reasons for the slow builds. The wget-lua image seems to be dead slow to build in buildx (about 1:20h), and I’m not sure why, when a regular build finishes in a fraction of that time. So far the steps that seem to take very long are git submodule init/update and some of the config steps, I believe, but I haven’t looked too deeply yet. Cutting this build time would help a lot with experimentation, though since the relevant image doesn’t need to be rebuilt often it might be a low return on investment.

And this is all… I’d love to hear how it worked for you if you try these ARM images!

3 replies on “ARM images to help the Archive Team”

The Warrior project and Docker files need a LOT of work to update for Raspberry Pi OS. I’ve been trying to get it running. I can get the web interface but it’s really not liking the newer version of wget-at available. It’s an absolute absurdity that the team isn’t working on more ARM and especially Pi versions. Many people like me have a ton of these around that could be working idly in the background, but I’m about to give up. I cannot get it to work.

A long time passed since this writeup, and the project I’ve targeted (Yahoo! Answers archive run) is now all in the past.

Which other Archive Team project are you interested in? And what Raspberry Pi hardware are you using? It would be interesting to see what issues you are running into.

[…] I’ve got an email from a reader of the ARM images to help the Archive Team blogpost from years ago, asking me about refreshing that project to use again. There I was […]