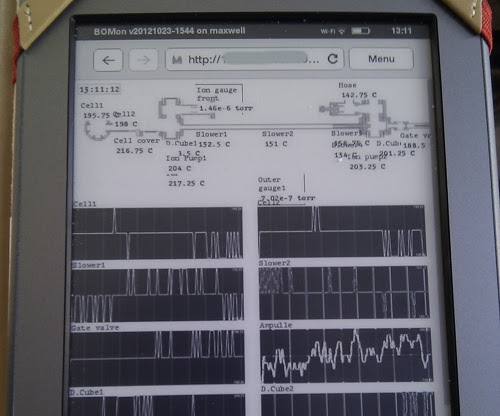

Ever since someone donated an IP phone to the Taipei Hackerspace, I’m trying to find time to set up an internal phone network between the hackerspace members. It should be fun to make our own infrastructure. Recently did some research, and started with it. Since if I get into something then I dive deep for a while, this was an intense week. This post is to summarize where I have got in this time

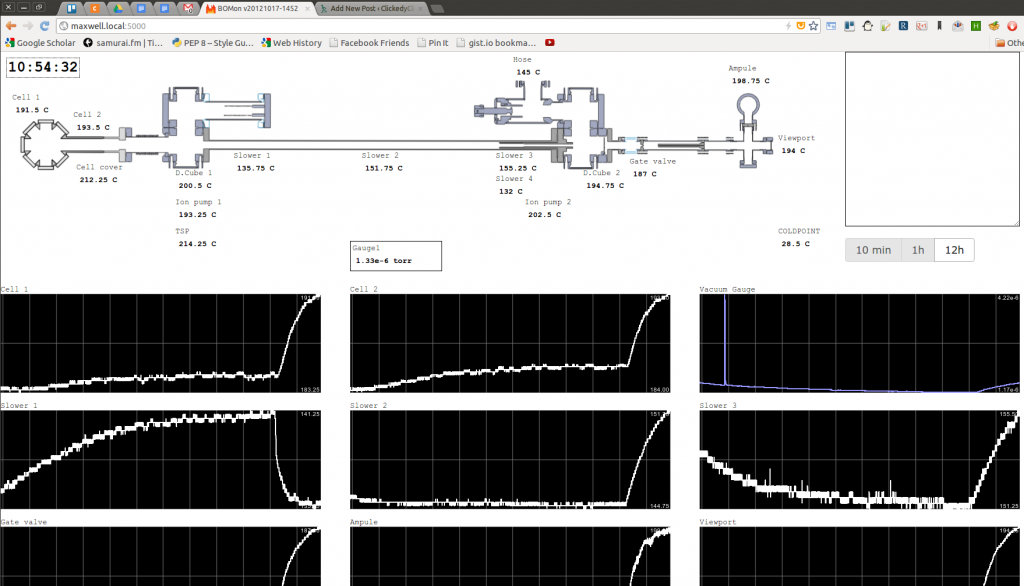

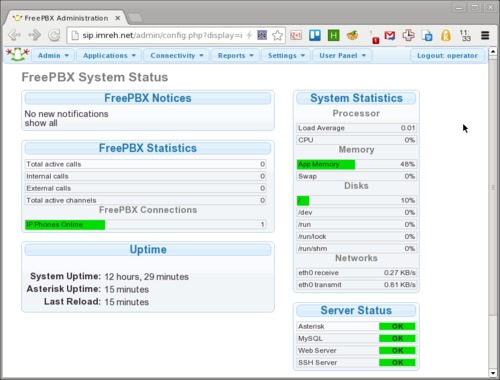

Asterisk & FreePBX

A bit of searching turned up Asterisk, a PBX (“private branch exchange” aka telephone network) software. It looked interesting because it came with a story: a guy building something awesome because he doesn’t know that it was supposed to be difficult. It’s also open source from the start, with a successful company build on top of the project.

Also found, that there’s a graphical control panel called FreePBX that makes using the all-command-line-and-config-files Asterisk easier to use. Both projects had a seemingly very detailed wiki, long track record, and strong following that made it worth checking them out.

The Server

Judging from the original install instructions on the FreePBX wiki, it looked like installing Asterisk & FreePBX is a complex (or rather many-step) process. Didn’t want to litter my own computer with broken installation artefacts, so enter VirtualBox. Using a virtual machine makes it easy to wipe and restart.

There’s a dedicated, preinstalled FreePBX distro based on CentOS, but had enough of CentOS for a while. Instead I just took Ubuntu 12.04.3 as a base, and FreePBX 2.11 and Asterisk 11 from the wiki. The install instructions were clear enough, though occasionally there were small differences needing a fix. Nothing major, but had to play around. After 5-6 reinstalls with increasing experience I got the basic functionality working, calls placed between and such, but the performance and sound quality wasn’t really that good. After thinking what could I improve, decided to take the next step: get out of the virtual machine, and up the version numbers (I’m Arch Linux user with a reason, living on the bleeding edge).

Enter DigitalOcean, a hosting provider that I used for other projects before (cheap, fast with SSD, good service). Set up a machine (aka “droplet”) in their Singaporean center (since that’s probably the closest one to Taiwan). I chose the 1Gb memory instance, because from experience with VirtualBox Asterisk+FreePBX maxed out at around that with a few test accounts.

Upping the version numbers I went with FreePBX 12.0 (from git) and Asterisk 12.1.1 (from download), both are testing versions. Asterisk had an extra dependency of libjansson-dev compared to version 11, didn’t check if any of the earlier dependencies are not required anymore.

Got the whole system working (after a few droplet wipes), and played with the installation with a bit more confidence. From initial experience, Asterisk is a bit like the Linux Kernel. It’s modular, complex, focus on reliability, and the “make menuselect” is a familiar environment after years of “make menuconfig” compiling my own kernel. On the other hand, FreePBX is a bit like WordPress. It has its own auto-updater (just like updating plugins in WordPress), loads and loads of menus, focuses on configuration and tries not to let any faulty module take down the system (found quite a few buggy behaviour, so that’s a good idea). The Kernel and WordPress are two familiar environments, so felt home here too somehow.

Asterisk has a bunch of vocabulary that I’m so far barely familiar with, and lots of functionality that I haven’t had a chance to test yet. FreePBX has a lot of functionality too, and still it’s a bit difficult for me to tell where does an Asterisk function (module, resource?) end and one FreePBX function (plugin?) start. The fact is that I got to feel excited about programmable phone routing (with Lua), fax-to-pdf, hotel style wake-up calls, voicemail recording, call tracing, speaking time, simple conference talks, intercom functionality, regardless from whether it’s a module or a plugin…

Some additional server notes: voicemail requires email out for notification, I set that up with Mandrill and postfix. For such testing it might not be important, but good to secure the server at least a bit with fail2ban and ufw (Uncomplicated Firewall), and probably other things I don’t do well yet. Just sayin’.

Accounts / Extensions

Accounts on the server are the extensions on which someone (or something), a numerical value. The vocabulary and concepts are also new to me, so it took a while to understand how things supposed to interoperate. Asterisk has a bunch of different kinds of extensions, of which I have tried two main ones: SIP and IAX.

SIP

SIP stands for Session Initiation Protocol. As far as I see it is basically a messaging protocol, to set up a connection between two parties, and also provide some other services, for example presence information (Online, Away, Busy….), messaging, and what not. The actual data of the call (voice or video) is trhough RTP (Real-time Transport Protocol).

The voice data in the transmission is compressed with one of the many codecs available:

- ulaw and alaw (G.711) are a pair of the standard codecs, okay quality, one of the basic one to have in any client

- speex is a variable bitrate codec, haven’t used that much

- gsm is lower bitrate, but lower quality too (think of crappy cell phone reception voice)

- G.722 is a hi-def (HD) voice codec, really good! I think beats Skype, and on par with a good Google Hangout quality,

- G.729 is a non-free codec, shows up here and there, but haven’t had a chance to try it, this is the other HD codec that I’ve seen recommended

In testing, this was some of the learning curve, how to set up clients, and also the server that they can communicate with each other. Who choses the codec (caller, callee, server)? How to prioritize the codecs in different clients? What does it look like (or sounds like) when there’s a problem in this area? How to debug and fix?

Asterisk 12 has two different SIP channels or components: their classic library (chan_sip), and a rewritten one (chan_pjsip). The latter one is a standalone library that can be used for other purposes as well. SIP usually works on UDP, while PJSIP can do UDP/TCP/WebSockets too, and feels stable and fast. Definitely would use that if I have to choose between these two. Still, it is in test phase (both in Asterisk and FreePBX), so not without headaches.

There are bunch of different clients that I tried:

On Linux:

- Ekiga is nice, simple, can sign into multiple accounts in multiple networks. Presence information, well integrated into the desktop with notifications and such. Does not seem to be able to handle non-standard SIP ports (which will be an issue further down)

- Linphone is really multiplatform (Linux, Win, OSX, smartphones…), but it was crashing on me quite a bit, doesn’t integrate into the desktop (no notification just sound on call), and can be confusing with the lot of settings (the control panel looks a mess). Can handle non-standard ports too.

- SFLPhone is good, works pretty well, simple, and can do IAX communication besides SIP.

On Android:

- Android actually has full SIP handling capabilities built in for a while now (under “internet phone”). That would be awesome, if there were more information how to set up and use, but in theory a SIP account can be fully integrated into the system.

- CSIPSimple really impressed me, probably the best working client I found. Integrates with the system (calls are handled as ‘calls’ with all the icons, history, and so on), good sound quality (can use the G.722 codec) and so on.

- SipDroid, VIMPhone, LinPhone…. these other clients, don’t even remember them, all of them fell short somehow

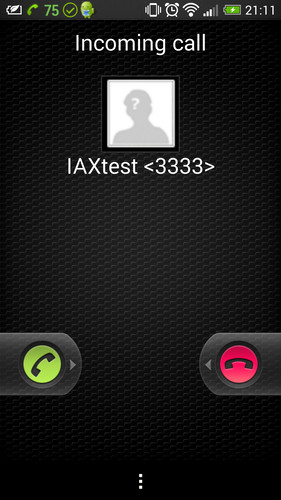

- Zoiper stands out as well, not just because it’s multiplatform, but because it’s pretty much the only one I found that can do both SIP and IAX. The Android system integration is not as close as CSIPSimple, but quite okay.

One of (the many) good thing about SIP that it is well known and pretty well supported. If there’s a “softphone” (phone in software), it’s quite likely to have SIP communication capabilities.

The bad thing about SIP though that it is well known and pretty well resented by the phone service providers. Many of those providers block SIP messages on their network, or sabotage the connection in some other way. On my own cell phone / 3G provider’s network, I couldn’t connect to Asterisk. In the forums some suggested that changing the port number that Asterisk listens on for SIP connections can solve things – and indeed after moving away from 5060/5061 to somewhere else, I could connect. The celebration was short lived, though because even though the calls now can reach the destination, RTP communication (the part that actually transports voice) was still broken. I don’t want to use VPN all the time (though might need to soon), and want to keep moving parts and settings to the minimum if I want others in the Hackerspace to join this network as well, thus SIP looks like a no-go because of the phone companies (darn).

IAX

Looking around, I found another type of channel, using the IAX (Inter-Asterisk eXchange) protocol. The bad thing about it that it is much less supported, but in turn it is not blocked by the phone companies either (since they don’t know about it).

Using SFLPhone and Zoiper I could successfully talk over 3G! Still it is not all good, the devil is in the details.

- It’s good that no need for custom ports

- It’s bad that the IAX channel seems to be more unstable on Asterisk (or maybe I messed up my install after a while?): some extensions have trouble logging on for a while until the server is restarted; the wakeup-calls plugin misbehaved with IAX extension.

- The less support also means less choice in clients. The ones I found cannot do G.722 so no HD voice anymore

- Has a security setting (requirecalltoken) that not all clients support, not sure if there are any implications.

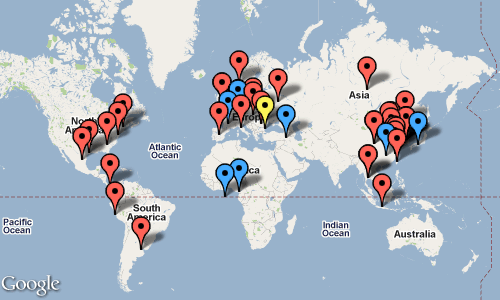

It’s also good, that Asterisk can route incoming SIP calls onto IAX extensions (i.e. the caller doesn’t have to care what technology the callee is using). On the note of routing, I could set things up such that outside calls can be routed into the system. E.g. every hackerspace could have their own Asterisk server and interoperate to call members at other spaces – sounds like a lot of work and might not worth it, but it also sounds awesome.

Summary & Future

I had a lot of fun playing with Asterisk. On the surface phone networks are familiar to everyone, but going deeper both makes things more confusing and opens my eyes how many possibilities there are for making something useful.

There are a lot of things that I thought about, but haven’t tried yet:

- Programmable dialplans (“what happens when a call is received”), via Lua. Lua is an awesome language and probably a lot more large piece of software has it embedded (since that’s one of its strength)

- Could script a lot more too via the Asterisk Gateway Interface (AGI).

- There are a bunch of other protocols and acronyms in Asterisk, for example Secure Real-Time Protocol (SRTP) and ZRTP, that could worth figuring out for a deeper understanding and security

- There’s an Asterisk on Raspberry Pi project that looks interesting (if nothing else then how do they lower the memory usage below the RPi’s <512MB?). Since Asterisk can be used with multiple servers in a network, the RPi can provide one kind of service (e.g. GSM gateway) while other servers with more resources do other stuff

- Using physical phones in the network, for example traditional phone network to come into the server, and IP Phone to ring out. Maybe setting up fax endpoint (and sending it out as PDF or printing it). Basically anything that is working on the threshold between physical and digital.

- Should check out how the likes of Line, Voxer, and Viber are doing VoIP on Android, do they have any interoperability?

- How about Twillio, can their system be a similar PBX on a much larger scale?

The funny thing is that looks like the original IP phone that started this whole adventure does not work with Asterisk. Never mind, it will be good for another project.