Yesterday I read an article claiming that for every topic, one only has to know Two Things.

For every subject, there are really only two things you really need to know. Everything else is the application of those two things, or just not important.

Of course it is a fascinating idea, and I started to think about my profession, physics. If I simplify my experience and knowledge down to this minimal level, what two things would I arrive at? I don’t think it is straightforward: one can only get to the bottom of this, and find the hidden truth below, after spending a lot of time with the subject and getting to know it inside and out. I feel that I’m still just scratching the surface of the vast knowledge of the universe (even after being a physicist for about 12 years now). Still, this doesn’t stop me from trying.

I’m an experimental physicist. I worked in Solid State Physics first and now mostly in Atomic & Laser Physics, all kinds of fun stuff. I had very good professors and great inspiration, and I have learnt a lot from them. When I think back on all the things I’ve learned, there are still a few that come to me as my first thoughts, and usually those are the right guesses, for whatever my intuition is worth.

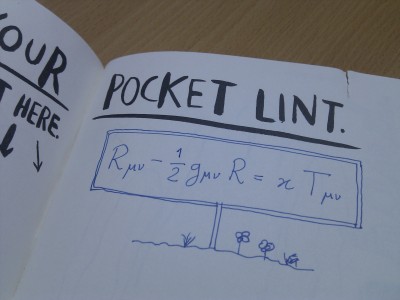

My Two Things about Physics:

- Pure math can take you very far along the way, though in the end you still need experiment to see whether the results describe something real.

- Everything is an approximation, but that’s fine. Just pick your approximations carefully.

Maybe it is worth expanding a little on these:

Pure math can be used to describe things extremely well. It’s maybe even too good at that, which got other, much cleverer people thinking as well, like a fellow Hungarian physicist, Wigner Jenő (or Eugene Wigner), who wrote about The Unreasonable Effectiveness of Mathematics in the Natural Sciences. I frequently got really excited when, after doing some complicated calculation to predict the behaviour of a physical system, the experiment matched it in all of its nuances. Be it atomic spectroscopy, the polarization of light reflected from metallic mirrors, or the magnetic field of a Zeeman slower, I had a lot of fun figuring out the physical theories of different phenomena and then matching them up with what happens in the lab. Of course, there were loads of times when they didn’t match, but then it turned out my math was off. Really, if I want to understand the world, math is one of the most useful and versatile tools to have.

Having that tool is of course not enough. When people come up with new and interesting math tricks, it often turns out (to many people’s amusement) that those tricks have some physical meaning and give some insight into the world around us. Often, but not always. Mathematics can really easily take one to very strange places and give a result that is completely unphysical. To complicate things even further, those unphysical results sometimes turn out to be correct after all, predicting real but so far unobserved things. An example of this is the Dirac equation, which gives two kinds of solutions: one for the electron, and one with negative energy that people first dismissed, but that was later understood to represent the positron. How to distinguish between a really wrong solution and one that is only “wrong according to current understanding” is a whole different level of problem.

The second point was really a revelation for me. Whatever equations we have, they all describe things only to a certain level. If we look closer, we often find differences from the theory that are harder and harder to explain the closer we get. On the other hand, using intuition and physical understanding, people often choose to ignore certain parts of the situation, or certain features of the problem, since they cannot affect the results to a level that would be observed in the given experiment. This makes the problem solvable, and once it is solved, one can build on the new understanding to go even deeper. Finding good approximations is almost as valuable as finding the right theory, and that’s why these approximations are often named after the people who came up with them, or given other shorthand names so everyone can quickly recall and use them.

There’s a whole methodology built to help come up with approximations and handle them, called perturbation theory: if the given problem is very similar to a simpler, already solved problem, then treat it as the simple one plus some small effect that changes the behaviour of the system relatively little. Not everything can be handled like this, but surprisingly many problems fit very well.
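To make the idea a bit more concrete, here is a small sketch in Python using an example of my own choosing, the pendulum. The "simple, already solved problem" is the small-angle pendulum with period T0 = 2π√(L/g); the "small effect" is the finite swing amplitude θ0, which to first order stretches the period by a factor (1 + θ0²/16). The function names and the default values for the length and g are just assumptions for this illustration. The exact period is computed with the arithmetic-geometric mean for comparison.

```python
import math

def agm(a, b, iterations=30):
    """Arithmetic-geometric mean; converges quadratically, so 30 steps is plenty."""
    for _ in range(iterations):
        a, b = (a + b) / 2, math.sqrt(a * b)
    return a

def period_zeroth(length=1.0, g=9.81):
    """The simple, solved problem: small-angle period, independent of amplitude."""
    return 2 * math.pi * math.sqrt(length / g)

def period_first(theta0, length=1.0, g=9.81):
    """Simple problem plus the leading small correction in the amplitude theta0 (radians)."""
    return period_zeroth(length, g) * (1 + theta0**2 / 16)

def period_exact(theta0, length=1.0, g=9.81):
    """Exact period, T = T0 / AGM(1, cos(theta0 / 2))."""
    return period_zeroth(length, g) / agm(1.0, math.cos(theta0 / 2))

if __name__ == "__main__":
    for theta0 in (0.1, 0.2, 0.5, 1.0):
        exact = period_exact(theta0)
        err0 = abs(period_zeroth() / exact - 1)
        err1 = abs(period_first(theta0) / exact - 1)
        print(f"theta0={theta0:.1f} rad  zeroth-order error={err0:.2e}  first-order error={err1:.2e}")
```

For small amplitudes the first-order result should sit much closer to the exact one than the plain small-angle formula, and the gap between the two approximations narrows as the swing gets larger, which is exactly the "pick your approximations carefully" part.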

Others’ Two Things about Physics

On the original site there were other people’s Two Things as well:

1. Energy is conserved.

2. Photons (and everything else) behave like both waves and particles.

-Tim Lee

1. Draw a diagram.

2. Get the dimensions straight.

-Eric Schafer

I personally don’t like these that much. The first pair just states two theories that could be superseded in the future, and right now they limit more than they enable. The second pair is good advice, but I can’t say those are the only things there are to physics. Having said that, I have had more adventures with incorrect dimensions and units than I’d like.

What other Two Things choices can one make, in physics, in other sciences, or in other topics?