I might have gone a bit overboard with this. Again. It all started when I was reading how the Taiwanese government is planning to implement a fuel subsidy. It bothered me because:

- I’m not a big fan of subsidies, since then people cannot make decisions based on the real cost of things

- A subsidy comes out of someone’s pocket anyway, so ultimately everyone pays for it

- Looking at the governing party’s track record and the nearing elections (<1 year), this is likely to be politically motivated

- Fuel is pretty cheap compared to other places already, so it must be already heavily subsidized.

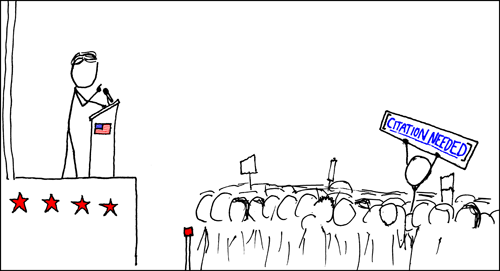

Points 1, 2 and 3 are opinions and generalizations. I had to realize that point 4 I had just thrown in there without knowing whether it is really true – I just believed it is. Now here’s a good example for [Citation needed].

Filling in the blanks

Yeah, what do I really know? I lived in Hungary and Britain before, and I remember that fuel there was more expensive than in Taiwan. Also, it’s open knowledge that the US is pretty cheap, compared to most of the world, that is… But are there any patterns in the price, and what would I expect Taiwan’s level to be?

I was checking around for a while, fishing for the right keywords for the search (“fuel” / “petrol” / “gas”, depending on where one’s from, as a starter). Found a couple of sites but they were mostly looking at price comparisons within a country (like Petrolprices for the UK) or within a region (like AMZS for Europe, which works weirdly – no real static link, click the UK flag then “fuel prices” in the menu on the left). After a while, however, I did come across a German organization, Deutsche Gesellschaft für Internationale Zusammenarbeit (GIZ), which in fact had a study about international fuel prices. Go ahead and check the 2009 version, it is very interesting to see all that data and some analysis as well. Historical trends, relative prices, some case studies too. I would have loved to see more analysis, but maybe next time, when I’ll be in a position to commission such research. :)

Anyway, looking at the Taiwanese data from 2009, diesel is slightly subsidized while gasoline is slightly taxed, which means that they are somewhat – but not too much – below and above the US price, respectively. In the ranking, Taiwan is very much on the cheap end.

While looking around a bit more on their site, I found they have a Data preview for 2010/2011 as well. The more recent the data, the better, so I took a look at that too. Now Taiwan is a bit above the US price with both types of fuel, but still on the cheap end. All their data was in a picture, though, and that’s not very handy… So I did a little data entry, resulting in this datafile.

I was also wondering whether the economy affects the prices, and if it does, then how? I went to Wikipedia first for GDP/capita for all the countries of the world, but they were taking the data from the International Monetary Fund, so let’s go to the source. I checked out their data export tool and there were quite a few more fields to choose from. I went with GDP per capita, Implied purchasing power parity, Value of oil exports, Value of oil imports (these last two are a good catch :). The output is here. The bottom of the page has a download link; I downloaded it into this file without modifications.

Next, I had to write some analysis code for the whole thing as well, converting the data into a suitable database and fixing some errors in the primary database’s data formats. So in the end I had a simple little script that does the following:

- Clean up some of the names (some Unicode errors originally), and fix one: I do want to convert the original “Taiwan Province of China” into “Taiwan”…

- Fix formatting errors in the IMF data: they used number formats of “12,345.00” instead of just “12345.00”

- Fill missing values with “-1” so it’s easy to filter out later

- Rank countries by fuel price, ignore countries that have missing economic data or missing price data

- Combine all this and print out on the console

(Scripts and data are shared in this git repo.)
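For a feel of what those steps look like, here is a minimal sketch in Python. It is not the script from the repo: the function names, the dictionary keys, the "cheapest first" ordering and the sample rows (with invented numbers) are all assumptions made for illustration.

```python
MISSING = -1.0  # sentinel for missing values, so they are easy to filter out later


def clean_country(name):
    """Tidy a country name; the Taiwan rename is the one fix named in the post."""
    name = name.strip()
    if name == "Taiwan Province of China":
        return "Taiwan"
    return name


def parse_number(text):
    """Turn '12,345.00' into 12345.0; cells that are not numbers become MISSING."""
    try:
        return float(text.replace(",", "").strip())
    except ValueError:
        return MISSING


def rank_by_price(rows, price_key="gasoline", econ_key="gdp_per_capita"):
    """Rank countries by price (cheapest first, an assumption), skipping
    any country with missing price or missing economic data."""
    complete = [r for r in rows
                if r[price_key] != MISSING and r[econ_key] != MISSING]
    ranked = sorted(complete, key=lambda r: r[price_key])
    return [(pos, r["country"], r[price_key])
            for pos, r in enumerate(ranked, start=1)]


# Placeholder rows: (country, GDP per capita in USD, gasoline price in US cents).
# The numbers are invented for the example, not taken from the GIZ or IMF files.
raw_rows = [
    ("Taiwan Province of China", "18,000.00", "100"),
    ("Country A", "45,000.00", "190"),
    ("Country B", "n/a", "60"),  # missing GDP, so it is left out of the ranking
]

rows = [
    {
        "country": clean_country(country),
        "gdp_per_capita": parse_number(gdp),
        "gasoline": parse_number(price),
    }
    for country, gdp, price in raw_rows
]

# Combine everything and print it on the console.
for pos, country, price in rank_by_price(rows):
    print(pos, country, price)
```

Using a sentinel like -1 keeps every column numeric, at the cost of having to remember to filter it out before doing any arithmetic on the values.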

Taking a look

I was looking around for some useful visualizer – something that can handle this much data better than an ordinary static plot. Fortunately, Highcharts JS seems to be just the right thing…

The first plot I wanted to reproduce is the one from GIZ’s Data preview. Let’s see how it works out:

Instructions: “red”/“blue” countries are net oil exporters/importers respectively; hover over any of the lines to see which country it is, and click-and-drag to zoom into an area…

So yeah, Taiwan is down at 49 out of 161 countries, with just a few net-importer (blue) countries ranked cheaper. Even those are mostly poor ones.

Now the second picture: how do fuel prices compare with GDP/capita, which I naively think has some connection to economic power?

Instructions: note that the GDP scale is logarithmic, hover and zoom are the same as before.

Might be just my eyes, but it seems to me that there are two lines on this plot if one ignores the net exporter (red) countries for a second. From the middle to the right prices are increasing: the wealthier countries can pay more for the fuel. On the other hand, from the middle to the left prices are increasing again: poorer countries cannot really afford it. The cheapest (nominally) are the middle-to-poor, $1000-5000 GDP/person countries.

Bottom line: Taiwan is there at ~$18000/¢100, and if there are indeed these lines, then Taiwan is waaaay below the wealthy country line. Based on the economy, the price should be closer to ¢150. This suggests to me that the original assessment was correct: Taiwanese fuel is cheap.
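To put a rough number on such a "wealthy country line", one option would be a least-squares fit of price against log10(GDP per capita) over the wealthier net importers. This is only a sketch of that idea, not how the ¢150 above was obtained (that one was read off the plot by eye). The function names are made up, and the sample points are invented placeholders rather than values from the GIZ or IMF data.

```python
import math


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def expected_price(gdp_per_capita, slope, intercept):
    """Price the fitted line predicts for a given GDP per capita."""
    return slope * math.log10(gdp_per_capita) + intercept


# Placeholder points: (GDP per capita in USD, fuel price in US cents).
# Invented for illustration only; swap in the real net-importer rows.
wealthy_importers = [(10_000, 100.0), (31_623, 150.0), (100_000, 200.0)]

# The plot uses a logarithmic GDP axis, so fit against log10(GDP).
log_gdp = [math.log10(gdp) for gdp, _ in wealthy_importers]
prices = [price for _, price in wealthy_importers]
slope, intercept = fit_line(log_gdp, prices)

print("price expected at $18000 GDP/capita:",
      round(expected_price(18_000, slope, intercept), 1))
```

With the real data, the gap between this predicted value and the actual price would be the "how far below the line" number.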

Global Price API

All of this data-hunting and conversion and plotting should not be this much of a pain. I have a feeling there’s a need and desire for open access to such information and that transparency would help people’s decision making – whether those people are in charge or part of the public (and should be “ultimately in charge”). Of course, I’m not the only one to say this, and I’m not even a very good one at making this happen – just check out Hans Rosling’s TED talk.

I was thinking, how to build a globally accessible database of consumer prices? Fuel is a good choice because it’s universally needed and there are not too many kinds, so one can compare apples to apples. On the other hand, there could be other items as well. Maybe recruit a few volunteers from a big bunch of countries so that periodically they add more info to a database. Or fully crowdsource it, maybe even the item categories as well? Then build an interface so that it can be easily queried and used by programmers and non-programmers alike. Or is there any such database already? Pitching version: “Archive.org for global price and other public data”. Not that I have a business model for this… I’m sure I’d prefer the same as SimpleGeo and completely open the data itself, but I know there are people who will still find opportunities – or make some.
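Just to make the idea a bit more concrete, here is a toy sketch of what a single price record and a simple query could look like. Everything in it is hypothetical: the `PriceRecord` and `PriceStore` names, the fields, and the sample entries (with made-up prices) are illustration only, not an existing service or API.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class PriceRecord:
    """One observation of a consumer price (hypothetical schema)."""
    country: str
    item: str          # e.g. "gasoline" or "diesel"
    price_usd: float   # price per unit, converted to USD
    observed: date     # when the price was recorded
    source: str        # who reported it: a volunteer, an agency, ...


class PriceStore:
    """In-memory stand-in for the shared database."""

    def __init__(self):
        self._records = []

    def add(self, record):
        self._records.append(record)

    def latest(self, item, country):
        """Most recent record for an item in a country, or None if there is none."""
        matches = [r for r in self._records
                   if r.item == item and r.country == country]
        return max(matches, key=lambda r: r.observed, default=None)


# Made-up sample entries, only to show the query.
store = PriceStore()
store.add(PriceRecord("Taiwan", "gasoline", 1.00, date(2010, 11, 1), "volunteer"))
store.add(PriceRecord("Taiwan", "gasoline", 1.05, date(2011, 3, 1), "volunteer"))

print(store.latest("gasoline", "Taiwan"))
```

Keeping the source and the observation date on every record is what would let people judge how much to trust a crowdsourced number.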

Any thoughts on this? One selfish thought I have is that this would be lovely so I never ever again have to manually enter all the names of all the countries of the world. :) But I do believe there’s much more to this project.